
- A network adapter, also called a network interface card (NIC) or LAN adapter, connects a computer to a local area network. In early releases, the NIC was a separate card that plugged into the computer's motherboard, and an RJ45 connector was used to connect it to other PCs. How do you configure the Microsoft Network Adapter Multiplexor protocol?

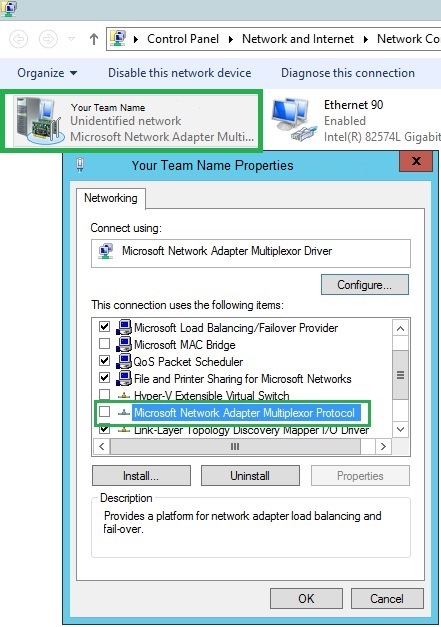

- The next step is attaching a Hyper-V switch to this team. Before we proceed, take a peek in your Network Connections settings again. You will see a new object for the NIC Team. Notice its Device Name is Microsoft Network Adapter Multiplexor Driver. This is the name of the device we will attach our Hyper-V virtual switch to in the next step.

- Additional adapters can be added to an existing team at any time. Every team and vNIC you create is exposed as a Microsoft Network Adapter Multiplexor Driver device. This is the device name that Hyper-V will see, so name your teams intuitively and take note of which driver number is assigned to which team (Multiplexor Driver #2, etc.).

Applies to: Windows Server (Semi-Annual Channel), Windows Server 2016

You can use this topic to learn some of the features of network adapters that might affect your purchasing choices.

Network-intensive applications require high-performance network adapters. This section explores some considerations for choosing network adapters, as well as how to configure different network adapter settings to achieve the best network performance.

Both hosts have two network adapters. On each host, one adapter is connected to the 192.168.1.x/24 subnet, and the other adapter is connected to the 192.168.2.x/24 subnet. Test the connectivity between source and destination, and ensure that the physical NIC can connect to the destination host.

May 20, 2019: I am trying unsuccessfully to enable the Microsoft Network Adapter Multiplexor Protocol in the Ethernet Properties for my Realtek Gaming GbE Family Controller. I need it to reduce the drop-offs I get with several Ethernet-connected radios I use. The prompts ask for the installation disk, which I do not have (or I do not know where it is on my computer).

Tip

You can configure network adapter settings by using Windows PowerShell. For more information, see Network Adapter Cmdlets in Windows PowerShell.

Offload Capabilities

Offloading tasks from the central processing unit (CPU) to the network adapter can reduce CPU usage on the server, which improves the overall system performance.

The network stack in Microsoft products can offload one or more tasks to a network adapter if you select a network adapter that has the appropriate offload capabilities. The following table provides a brief overview of different offload capabilities that are available in Windows Server 2016.

| Offload type | Description |
|---|---|
| Checksum calculation for TCP | The network stack can offload the calculation and validation of Transmission Control Protocol (TCP) checksums on send and receive code paths. It can also offload the calculation and validation of IPv4 and IPv6 checksums on send and receive code paths. |
| Checksum calculation for UDP | The network stack can offload the calculation and validation of User Datagram Protocol (UDP) checksums on send and receive code paths. |
| Checksum calculation for IPv4 | The network stack can offload the calculation and validation of IPv4 checksums on send and receive code paths. |
| Checksum calculation for IPv6 | The network stack can offload the calculation and validation of IPv6 checksums on send and receive code paths. |
| Segmentation of large TCP packets | The TCP/IP transport layer supports Large Send Offload v2 (LSOv2). With LSOv2, the TCP/IP transport layer can offload the segmentation of large TCP packets to the network adapter. |
| Receive Side Scaling (RSS) | RSS is a network driver technology that enables the efficient distribution of network receive processing across multiple CPUs in multiprocessor systems. More detail about RSS is provided later in this topic. |
| Receive Segment Coalescing (RSC) | RSC is the ability to group packets together to minimize the header processing that is necessary for the host to perform. A maximum of 64 KB of received payload can be coalesced into a single larger packet for processing. More detail about RSC is provided later in this topic. |
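To make the checksum rows concrete, here is a minimal Python sketch of the 16-bit ones'-complement Internet checksum (RFC 1071), which the IPv4 header checksum uses and on which the TCP and UDP checksums are also built. It is an illustrative software model, not a Windows or driver API. The TCP and UDP checksums additionally cover a pseudo-header, which is omitted here. With checksum offload, the adapter performs this work instead of the CPU.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit ones'-complement checksum as described in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with one zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # sum 16-bit big-endian words
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF


# Sample IPv4 header with its checksum field (bytes 10-11) set to zero.
header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
checksum = internet_checksum(header)
print(hex(checksum))  # 0xb861

# Validation on receive: the checksum over the header including the stored
# checksum is 0 when the header is intact.
filled = header[:10] + checksum.to_bytes(2, "big") + header[12:]
print(internet_checksum(filled))  # 0
```

The send path computes the value, and the receive path validates it. Both steps are what the table describes as offloadable.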

Receive Side Scaling

Windows Server 2016, Windows Server 2012, Windows Server 2012 R2, Windows Server 2008 R2, and Windows Server 2008 support Receive Side Scaling (RSS).

Some servers are configured with multiple logical processors that share hardware resources (such as a physical core) and which are treated as Simultaneous Multi-Threading (SMT) peers. Intel Hyper-Threading Technology is an example. RSS directs network processing to up to one logical processor per core. For example, on a server with Intel Hyper-Threading, 4 cores, and 8 logical processors, RSS uses no more than 4 logical processors for network processing.
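The arithmetic in this example can be sketched as a simplified Python model. This is not how Windows enumerates processors. The model assumes a single processor group and consecutively numbered SMT siblings, so that core c owns logical processors c*t through c*t+t-1. It keeps only the first logical processor of each core.

```python
from __future__ import annotations


def rss_eligible_processors(cores: int, threads_per_core: int) -> list[int]:
    """Keep one logical processor per physical core (simplified model).

    Assumes SMT siblings are numbered consecutively, so core c owns logical
    processors c*threads_per_core .. c*threads_per_core + threads_per_core - 1.
    """
    return [core * threads_per_core for core in range(cores)]


# 4 cores with Hyper-Threading (2 threads each) = 8 logical processors,
# but RSS spreads network processing over at most 4 of them.
eligible = rss_eligible_processors(cores=4, threads_per_core=2)
print(eligible)       # [0, 2, 4, 6]
print(len(eligible))  # 4
```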

RSS distributes incoming network I/O packets among logical processors so that packets which belong to the same TCP connection are processed on the same logical processor, which preserves ordering.

RSS also load balances UDP unicast and multicast traffic, and it routes related flows (which are determined by hashing the source and destination addresses) to the same logical processor, preserving the order of related arrivals. This helps improve scalability and performance for receive-intensive scenarios for servers that have fewer network adapters than they do eligible logical processors.
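The following Python sketch illustrates how hashing keeps each flow on one processor. It computes a Toeplitz hash, the hash function NDIS RSS uses, over an IPv4 TCP 4-tuple, and it uses the low-order bits of the hash to index an indirection table. The 40-byte key, the 128-entry table, the processor list, and the addresses are example values chosen for illustration. On a real system, the operating system programs the adapter with its own key and table.

```python
from __future__ import annotations

import ipaddress
import struct

# Example 40-byte RSS secret key (an assumption; the OS programs the real key).
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0"
    "d0ca2bcbae7b30b477cb2da38030f20c"
    "6a42b73bbeac01fa"
)

# Hypothetical 128-entry indirection table filled round-robin with 4 processors.
PROCESSORS = [0, 2, 4, 6]
INDIRECTION_TABLE = [PROCESSORS[i % len(PROCESSORS)] for i in range(128)]


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: for each set input bit, XOR in the 32-bit key window starting there."""
    key_bits = len(key) * 8
    if key_bits < len(data) * 8 + 32:
        raise ValueError("key is too short for this input")
    key_int = int.from_bytes(key, "big")
    result = 0
    for bit in range(len(data) * 8):
        if data[bit // 8] & (0x80 >> (bit % 8)):
            result ^= (key_int >> (key_bits - 32 - bit)) & 0xFFFFFFFF
    return result


def tcp4_hash_input(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Source address, destination address, source port, destination port (network order)."""
    return (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))


def processor_for_flow(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> int:
    """Hash the 4-tuple and use the low-order bits to pick an indirection table bucket."""
    h = toeplitz_hash(RSS_KEY, tcp4_hash_input(src_ip, dst_ip, src_port, dst_port))
    return INDIRECTION_TABLE[h & (len(INDIRECTION_TABLE) - 1)]


# Packets of the same TCP connection always land on the same processor...
print(processor_for_flow("10.0.0.5", "10.0.0.9", 50000, 443)
      == processor_for_flow("10.0.0.5", "10.0.0.9", 50000, 443))  # True

# ...while different connections (here: different source ports) spread out.
spread = {processor_for_flow("10.0.0.5", "10.0.0.9", port, 443)
          for port in range(50000, 50064)}
print(sorted(spread))
```

The hash is deterministic, so every packet of a flow maps to the same bucket, and the ordering within the flow is preserved. Distinct flows hash to different buckets and are therefore spread across the processors listed in the table.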

Configuring RSS

In Windows Server 2016, you can configure RSS by using Windows PowerShell cmdlets and RSS profiles.

You can select an RSS profile by using the -Profile parameter of the Set-NetAdapterRss Windows PowerShell cmdlet.

Windows PowerShell commands for RSS configuration

The following cmdlets allow you to see and modify RSS parameters per network adapter.

Note

For a detailed command reference for each cmdlet, including syntax and parameters, you can click the following links. In addition, you can pass the cmdlet name to Get-Help at the Windows PowerShell prompt for details on each command.

Disable-NetAdapterRss. This command disables RSS on the network adapter that you specify.

Enable-NetAdapterRss. This command enables RSS on the network adapter that you specify.

Get-NetAdapterRss. This command retrieves RSS properties of the network adapter that you specify.

Set-NetAdapterRss. This command sets the RSS properties on the network adapter that you specify.

RSS profiles

You can use the -Profile parameter of the Set-NetAdapterRss cmdlet to specify which logical processors are assigned to which network adapter. Available values for this parameter are:

Closest. Logical processor numbers that are near the network adapter's base RSS processor are preferred. With this profile, the operating system might rebalance logical processors dynamically based on load.

ClosestStatic. Logical processor numbers near the network adapter's base RSS processor are preferred. With this profile, the operating system does not rebalance logical processors dynamically based on load.

NUMA. Logical processor numbers are generally selected on different NUMA nodes to distribute the load. With this profile, the operating system might rebalance logical processors dynamically based on load.

NUMAStatic. This is the default profile. Logical processor numbers are generally selected on different NUMA nodes to distribute the load. With this profile, the operating system will not rebalance logical processors dynamically based on load.

Conservative. RSS uses as few processors as possible to sustain the load. This option helps reduce the number of interrupts.

Depending on the scenario and the workload characteristics, you can also use other parameters of the Set-NetAdapterRss Windows PowerShell cmdlet to specify the following:

- On a per-network adapter basis, how many logical processors can be used for RSS.

- The starting offset for the range of logical processors.

- The node from which the network adapter allocates memory.

Following are the additional Set-NetAdapterRss parameters that you can use to configure RSS:

Note

In the example syntax for each parameter below, the network adapter name Ethernet is used as an example value for the -Name parameter of the Set-NetAdapterRss command. When you run the cmdlet, ensure that the network adapter name that you use is appropriate for your environment.

* MaxProcessors: Sets the maximum number of RSS processors to be used. This ensures that application traffic is bound to a maximum number of processors on a given interface. Example syntax:

  Set-NetAdapterRss -Name 'Ethernet' -MaxProcessors <value>

* BaseProcessorGroup: Sets the base processor group of a NUMA node. This impacts the processor array that is used by RSS. Example syntax:

  Set-NetAdapterRss -Name 'Ethernet' -BaseProcessorGroup <value>

* MaxProcessorGroup: Sets the maximum processor group of a NUMA node. This impacts the processor array that is used by RSS. Setting this restricts RSS to a maximum processor group so that load balancing is aligned within a k-group. Example syntax:

  Set-NetAdapterRss -Name 'Ethernet' -MaxProcessorGroup <value>

* BaseProcessorNumber: Sets the base processor number of a NUMA node. This impacts the processor array that is used by RSS and allows partitioning processors across network adapters. This is the first logical processor in the range of RSS processors that is assigned to each adapter. Example syntax:

  Set-NetAdapterRss -Name 'Ethernet' -BaseProcessorNumber <Byte Value>

* NumaNode: The NUMA node that each network adapter can allocate memory from. This can be within a k-group or from different k-groups. Example syntax:

  Set-NetAdapterRss -Name 'Ethernet' -NumaNode <value>

* NumberOfReceiveQueues: If your logical processors seem to be underutilized for receive traffic (for example, as viewed in Task Manager), you can try increasing the number of RSS queues from the default of 2 to the maximum that is supported by your network adapter. Your network adapter may have options to change the number of RSS queues as part of the driver. Example syntax:

  Set-NetAdapterRss -Name 'Ethernet' -NumberOfReceiveQueues <value>
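The way BaseProcessorNumber and MaxProcessors partition processors across adapters can be sketched with a hypothetical Python model. The model assumes a single processor group and consecutively numbered SMT siblings. It also assumes that RSS takes one logical processor per core, starting at the base processor number. The adapter layout and values are made up. On a real system, the selection also depends on the RSS profile and the NUMA topology.

```python
from __future__ import annotations


def rss_processor_range(base_processor_number: int, max_processors: int,
                        threads_per_core: int = 2) -> list[int]:
    """Logical processors an adapter would use in this simplified model.

    Starts at base_processor_number and takes one logical processor per core
    (stepping over SMT siblings) until max_processors are selected.
    """
    return [base_processor_number + i * threads_per_core for i in range(max_processors)]


# Hypothetical host with 16 logical processors (8 cores x 2 threads) and two
# adapters, each given its own half of the machine.
adapter_a = rss_processor_range(base_processor_number=0, max_processors=4)
adapter_b = rss_processor_range(base_processor_number=8, max_processors=4)
print(adapter_a)  # [0, 2, 4, 6]
print(adapter_b)  # [8, 10, 12, 14]
print(set(adapter_a).isdisjoint(adapter_b))  # True
```

In this model, the split corresponds to setting -BaseProcessorNumber 0 on one adapter and -BaseProcessorNumber 8 on the other, each with -MaxProcessors 4. The two adapters then never compete for the same processors.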

For more information, click the following link to download Scalable Networking: Eliminating the Receive Processing Bottleneck—Introducing RSS in Word format.

Understanding RSS Performance

Tuning RSS requires understanding the configuration and the load-balancing logic. To verify that the RSS settings have taken effect, you can review the output when you run the Get-NetAdapterRss Windows PowerShell cmdlet. Following is example output of this cmdlet.

In addition to echoing parameters that were set, the key aspect of the output is the indirection table output. The indirection table displays the hash table buckets that are used to distribute incoming traffic. In this example, the n:c notation designates the NUMA k-group:CPU index pair that is used to direct incoming traffic. We see exactly 2 unique entries (0:0 and 0:4), which represent k-group 0/CPU 0 and k-group 0/CPU 4, respectively.

There is only one k-group for this system (k-group 0), and the indirection table has n entries (where n <= 128). Because the number of receive queues is set to 2, only 2 processors (0:0, 0:4) are chosen, even though maximum processors is set to 8. In effect, the indirection table is hashing incoming traffic to only 2 of the 8 available CPUs.

To fully utilize the CPUs, the number of RSS receive queues must be equal to or greater than the maximum number of processors. In the previous example, NumberOfReceiveQueues should be set to 8 or greater.
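The effect described above can be reproduced with a toy Python model of the indirection table. The model assumes, for illustration only, that the number of distinct processors in the table is min(NumberOfReceiveQueues, MaxProcessors), and that the chosen processors are spaced evenly across the candidate range. With 8 candidate CPUs and 2 queues, this happens to yield the entries 0:0 and 0:4 from the example. It is not the actual Windows selection algorithm.

```python
from __future__ import annotations


def indirection_table(candidates: list[int], receive_queues: int,
                      size: int = 128, k_group: int = 0) -> list[str]:
    """Toy indirection table in the "k-group:CPU" notation shown by Get-NetAdapterRss."""
    if receive_queues < 1:
        raise ValueError("need at least one receive queue")
    active_count = min(receive_queues, len(candidates))  # queues cap the distinct CPUs
    step = len(candidates) // active_count
    active = [candidates[i * step] for i in range(active_count)]  # evenly spaced CPUs
    return [f"{k_group}:{active[i % active_count]}" for i in range(size)]


cpus = list(range(8))  # MaxProcessors = 8

two_queues = indirection_table(cpus, receive_queues=2)
print(sorted(set(two_queues)))  # ['0:0', '0:4']

eight_queues = indirection_table(cpus, receive_queues=8)
print(len(set(eight_queues)))  # 8
```

Raising the queue count to match MaxProcessors is what lets every candidate CPU appear in the table.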

NIC Teaming and RSS

RSS can be enabled on a network adapter that is teamed with another network interface card by using NIC Teaming. In this scenario, only the underlying physical network adapters can be configured to use RSS; you cannot run the RSS cmdlets against the teamed network adapter.

Receive Segment Coalescing (RSC)

Receive Segment Coalescing (RSC) helps performance by reducing the number of IP headers that are processed for a given amount of received data. It should be used to help scale the performance of received data by grouping (or coalescing) the smaller packets into larger units.

This approach can affect latency, with benefits mostly seen in throughput gains. RSC is recommended to increase throughput for receive-heavy workloads. Consider deploying network adapters that support RSC.

On these network adapters, ensure that RSC is on (this is the default setting), unless you have specific workloads (for example, low latency, low throughput networking) that show benefit from RSC being off.
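The following is a minimal Python model of the coalescing step. Consecutive, in-sequence TCP segments of the same connection are merged into one larger unit, capped at the 64 KB payload limit mentioned in the offload table, so the host processes one header instead of many. This is a simplified sketch. It does not model TCP flags, options, or timers, and any gap in sequence numbers ends the current unit. Real RSC is performed by the adapter and its driver, and the flow tuple and segment sizes below are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_COALESCED_PAYLOAD = 64 * 1024  # up to 64 KB of payload per coalesced packet


@dataclass
class Segment:
    flow: tuple[str, int, str, int]  # hypothetical (src ip, src port, dst ip, dst port)
    seq: int                         # sequence number of the first payload byte
    length: int                      # payload length in bytes


def coalesce(segments: list[Segment]) -> list[Segment]:
    """Merge back-to-back, in-order segments of one flow (simplified RSC model)."""
    merged: list[Segment] = []
    for seg in segments:
        last = merged[-1] if merged else None
        if (last is not None
                and last.flow == seg.flow
                and last.seq + last.length == seg.seq
                and last.length + seg.length <= MAX_COALESCED_PAYLOAD):
            last.length += seg.length  # extend the current unit: one header instead of two
        else:
            merged.append(Segment(seg.flow, seg.seq, seg.length))  # start a new unit
    return merged


flow = ("10.0.0.9", 443, "10.0.0.5", 50000)
burst = [Segment(flow, 1000 + i * 1460, 1460) for i in range(50)]
result = coalesce(burst)
print([s.length for s in result])  # [64240, 8760]

gap = [Segment(flow, 0, 1000), Segment(flow, 2000, 1000)]  # bytes 1000-1999 missing
print(len(coalesce(gap)))  # 2
```

In this model, 50 received segments are reduced to 2 units of header processing. This is the throughput benefit, and the reason that waiting to coalesce can add latency.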

Understanding RSC Diagnostics

You can diagnose RSC by using the Windows PowerShell cmdlets Get-NetAdapterRsc and Get-NetAdapterStatistics.

Following is example output when you run the Get-NetAdapterRsc cmdlet.

The Get-NetAdapterRsc cmdlet shows whether RSC is enabled on the interface and whether TCP allows RSC to be in an operational state. The failure reason provides details about why RSC could not be enabled on that interface.

In the previous scenario, IPv4 RSC is supported and operational on the interface. To understand diagnostic failures, look at the coalesced bytes and the exceptions that were caused; these provide an indication of coalescing issues.

Following is example output when you run the Get-NetAdapterStatistics cmdlet.

RSC and Virtualization

RSC is only supported in the physical host when the host network adapter is not bound to the Hyper-V Virtual Switch. RSC is disabled by the operating system when the host is bound to the Hyper-V Virtual Switch. In addition, virtual machines do not get the benefit of RSC because virtual network adapters do not support RSC.

RSC can be enabled for a virtual machine when Single Root Input/Output Virtualization (SR-IOV) is enabled. In this case, virtual functions support RSC capability; hence, virtual machines also receive the benefit of RSC.

Microsoft Network Adapter Multiplexor Driver Install

Network Adapter Resources

A few network adapters actively manage their resources to achieve optimum performance. Several network adapters allow you to manually configure resources by using the Advanced Networking tab for the adapter. For such adapters, you can set the values of a number of parameters, including the number of receive buffers and send buffers.

Configuring network adapter resources is simplified by the use of the following Windows PowerShell cmdlets.

For more information, see Network Adapter Cmdlets in Windows PowerShell.

For links to all topics in this guide, see Network Subsystem Performance Tuning.


- Microsoft has addressed a variety of redundancy and performance features with this release and presented an overview of them at Build.

- At the same time, our NICs have only one protocol bound: the Microsoft Network Adapter Multiplexor Protocol.

- I manually set all of the MAC addresses to.

- 05-06-2017: This third NIC is of the type Microsoft Network Adapter Multiplexor Driver.

24-01-2020: For example, if there are two physical network adapters in a team, the Microsoft Network Adapter Multiplexor Protocol is enabled on these two physical network adapters, while the other protocols are bound to the team adapter instead. 25-03-2019: If I remove one NIC from the team and use that NIC for the external network, everything starts to work again. 25-03-2019: The multiplexor protocol is used for multiple network adapters, not for multiple PFs (physical functions) within the same card. NIC Teaming allows an administrator to place multiple network adapters that are part of the same machine into a team.

24-01-2020: The Microsoft Network Adapter Multiplexor protocol is basically a kernel-mode driver that is commonly used with network interface card bonding. In the left configuration pane, click the adapter you want to use to create the team. Prerequisites: a Windows Server 2012 R2 server with the Hyper-V role installed. NIC Teaming is the essential use of the protocol.

I want to quickly write about creating NIC teams on Windows Server 2012 R2 cluster hosts for virtual machine access to the public network. These virtual network adapters provide fast performance and fault tolerance in the event of a network adapter failure. As a result, I can't connect to my Wi-Fi unless I am right next to the router.

17-12-2016: This Windows Server 2012 R2 NIC Teaming user guide provides information about NIC Teaming architecture, bandwidth aggregation mechanisms, and failover algorithms, along with a step-by-step guide to the NIC Teaming management tool. If the Microsoft Network Adapter Multiplexor Protocol is not the problem, I don't know what is.

In Windows 10, it is started only if the user, an application, or another service starts it. The extensible switch external network adapter can be bound to the virtual miniport edge of an NDIS multiplexer (MUX) intermediate driver. This protocol is responsible for diverting the traffic from a teamed adapter to the physical NIC. 07-04-2014: This video looks at using the NIC Teaming feature on Windows Server 2012 R2 to combine 3 network cards. Is the host unmanageable after some scheduled maintenance on Windows Server 2012? 25-06-2019: The Microsoft Network Adapter Multiplexor Protocol is a special set of configurations that comes into play when a user combines two different connections.

- MAC conflict: A port on the virtual switch has the same MAC as one of the underlying team members on Team NIC Microsoft Network Adapter Multiplexor Driver (event XML). Running Get-NetAdapter shows the same MAC addresses; however, other servers that are working fine show the same.

- Configuring Windows Server 2012 NIC Teaming for a Hyper-V virtual machine (MS Server Pro): NIC Teaming is a new feature of Windows Server 2012 that allows multiple network adapters to be placed into a team for the purpose of providing network fault tolerance and continuous availability.

To create a new team, choose Add to Team > Create New Team. 25-09-2013: Switch Independent and Address Hash. Select all the adapters that you want to be part of the same team. The MUX intermediate driver itself can be bound to a team of one or more physical network adapters on the host. This configuration is known as an extensible switch team.

In the Teams section, select the team to remove. When STP (Spanning Tree Protocol) is enabled on the network switch ports to which the team's network adapters are connected, network communications may be disrupted.

Microsoft Network Adapter Multiplexor Setup Download

- On the 2008 server, I now have the appropriate two adapters listed.

- 14-10-2019: Hyper-V and network teaming.

- 25-06-2019: When NIC Teaming is initiated, the multiplexor protocol is enabled for one or two of the connected adapters, depending on the connection and the number of network adapters, while the others still use other items from the list.

- If we open the properties of the NICs and the team adapter, we can see that the team adapter has all network protocols associated with it.

- The video looks at using NIC Teaming in software.


I use Connectify to share my wired connection via Wi-Fi, but I'm now unable to do this because the program thinks that my wireless adapter is an Ethernet adapter. 13-05-2013: This can occur if the drivers being used for NIC Teaming on the agent do not properly register in WMI. For example, if there are two physical network adapters in a team, the Microsoft Network Adapter Multiplexor Protocol will be checked for these two physical network adapters and unchecked in the teamed adapter, as shown in the screenshot below.

Microsoft Network Adapter Multiplexor Driver Settings

- By default, the protocol is installed as part of the physical network adapter initialization.

- I recently installed the Windows Phone 8 SDK, and now Windows recognizes my wireless adapter as an Ethernet adapter.

- 25-07-2017: The first scenario is called adapter teaming, which means using two or more adapters at the same time, so that you can send and receive more packets than a single adapter could.

- The application software is tied to the MAC address, so the application keeps failing after a reboot.

If the Microsoft Network Adapter Multiplexor Protocol fails to start, the failure details are recorded in the Event Log. Network interface card (NIC) Teaming in Windows Server 2012 is a technology that enables a system to link two or more NICs for failover or bandwidth aggregation purposes. Features such as bandwidth aggregation are exciting stuff. I had expected that when I create a Hyper-V NIC based on the multiplexor NIC teamed within Windows Server, it would also have a speed of 4 Gbps. Can I link the 10G NIC to the multiplexor driver the same way my existing NIC team is linked, and then disconnect the old NIC team without having to break the virtual switch I created in Hyper-V, and thus break all the network configurations on the VMs?